Updated 2019-11-01 – This blog post is obsolete; please see Nutanix AHV Virtual Machine High Availability for the most recent information.

Updated 2017-11-30 – To match new functionality in Acropolis.

—————————————————————————————————————————————

This is the second part of the Acropolis Virtual Machine High Availability (VMHA) blog series; you can find the first part here. This part covers how Acropolis determines when a virtual machine needs to be restarted on another Acropolis Hypervisor (AHV) host in the Nutanix Acropolis Cluster. It covers both what happens when an AHV Host fails and what happens when an AHV Host is network partitioned.

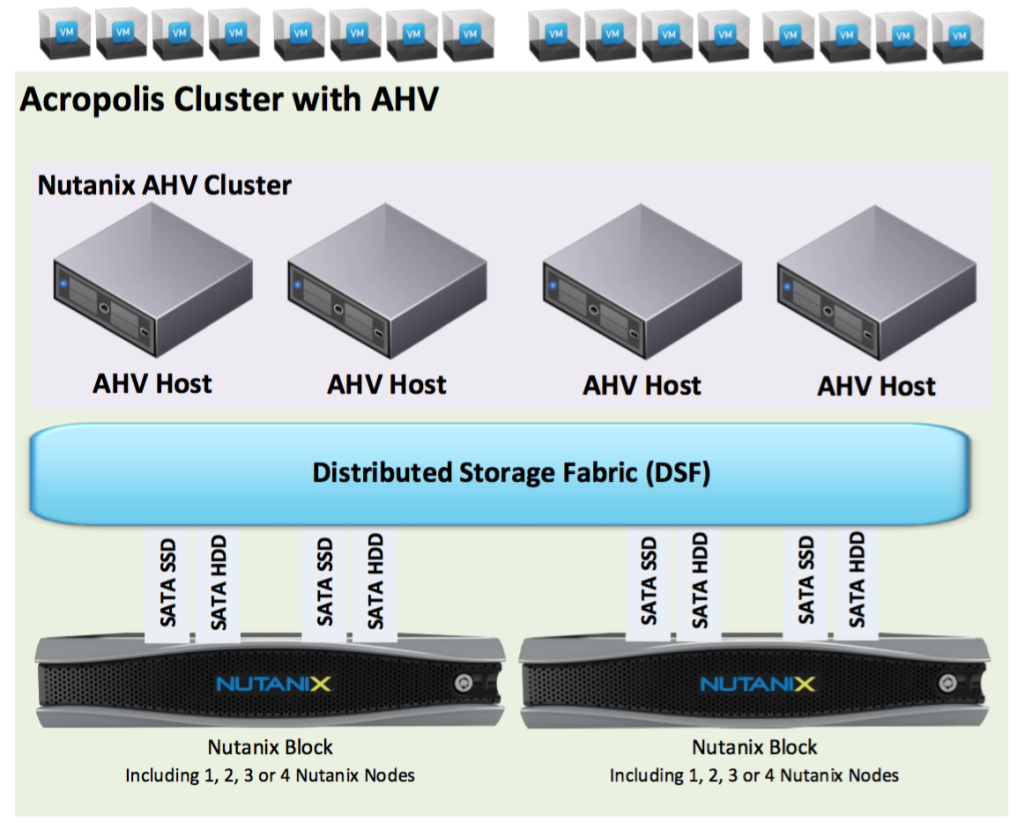

I’m including the picture above in this blog post as well (it was part of the initial VMHA blog post, which you can find here), since it’s critical to understand the naming convention used for an Acropolis Cluster with AHV.

To understand what happens during an AHV Host failure or when an AHV Host is network partitioned, we must first understand how the health checks that determine whether an AHV Host in the Nutanix AHV Cluster is up or down are conducted.

Acropolis, as described in this blog post, is the name of a framework that includes several features, and the process delivering this framework is also called Acropolis.

The Acropolis logical construct uses a master/subordinate setup, where one Controller Virtual Machine (CVM) is the master and the rest of the CVMs in the Nutanix AHV Cluster are subordinates.

A critical component involved in VMHA is the CVM Stargate process, which handles IO in the Distributed Storage Fabric (DSF). Read more about the Stargate process here.

Failure detection

The Acropolis Master communicates once every second with the libvirtd process on each AHV Host (you can read more about libvirtd here). The failover process is initiated when this communication fails and is not reestablished within 40-60 seconds, depending on the failure scenario.
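To make the detection rule concrete, here is a minimal sketch of a per-host failure detector, assuming a fixed timeout that you pick from the 40-60 second range. `HostMonitor`, `first_failover_time`, and the host name are hypothetical names for illustration; this is not Acropolis code. The key point is that any successful check resets the clock, so failover only starts after an uninterrupted silence of the full timeout.

```python
from dataclasses import dataclass
from typing import Optional

CHECK_INTERVAL_S = 1  # from the post: one health check per second


@dataclass
class HostMonitor:
    """Hypothetical per-host failure detector kept by the Acropolis Master."""
    host: str
    timeout_s: int    # assumption: 40-60 s depending on the failure scenario
    last_ok: int = 0  # time (s) of the most recent successful health check

    def on_check(self, now: int, reachable: bool) -> bool:
        """Record one health check; return True once failover should start."""
        if reachable:
            self.last_ok = now  # communication (re)established: reset the clock
            return False
        return now - self.last_ok >= self.timeout_s


def first_failover_time(outage_at: int, timeout_s: int,
                        horizon: int = 300) -> Optional[int]:
    """Check once per interval; the host stops answering at outage_at."""
    monitor = HostMonitor("ahv-host-2", timeout_s)
    for now in range(0, horizon + 1, CHECK_INTERVAL_S):
        if monitor.on_check(now, reachable=now < outage_at):
            return now
    return None  # host never stayed silent long enough within the horizon


print(first_failover_time(outage_at=10, timeout_s=60))  # prints 69
```

Here the last successful check happens at t=9, so with a 60-second timeout failover starts at t=69.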

Failure Scenario I – Acropolis Master is alive and a remote AHV host fails

| Time in sec | Event |
|---|---|
| Before T0 | Normal operation: the Acropolis Master successfully completes the health checks against the libvirtd process on all remote AHV hosts. |
| T0 | The Acropolis Master loses network connectivity to the libvirtd process on the remote AHV host(s). |
| T20 | The Acropolis Master starts a 40-second timeout. |
| T60 | The Acropolis Master instructs the Stargate processes on all CVMs to block IO from the AHV host it cannot establish a connection to, then waits for all remote CVM Stargate processes to acknowledge the IO block. |
| T120 | All VMs are restarted. The Acropolis Master is responsible for distributing the VM start requests to the available AHV hosts. |
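The ordering in the T60 and T120 rows of the table above matters: VMs are only restarted after every Stargate process has acknowledged the IO block. Below is a minimal sketch of that ordering. `StargateStub`, `handle_host_failure`, and the host/CVM names are hypothetical, and the round-robin placement is an assumption, since the post does not describe how the Master chooses target hosts.

```python
from itertools import cycle
from typing import Dict, List, Set


class StargateStub:
    """Hypothetical stand-in for one CVM's Stargate process."""

    def __init__(self, cvm: str, responsive: bool = True):
        self.cvm = cvm
        self.responsive = responsive
        self.blocked_hosts: Set[str] = set()

    def block_io_from(self, host: str) -> bool:
        """Block IO from `host`; the return value models the acknowledgement."""
        if self.responsive:
            self.blocked_hosts.add(host)
        return self.responsive


def handle_host_failure(failed_host: str, vms: List[str],
                        stargates: List[StargateStub],
                        healthy_hosts: List[str]) -> Dict[str, List[str]]:
    if not healthy_hosts:
        raise RuntimeError("no available AHV host to restart VMs on")
    # T60: ask every Stargate process to fence IO from the failed host.
    acks = [sg.block_io_from(failed_host) for sg in stargates]
    # Only continue once all of them have acknowledged the IO block.
    if not all(acks):
        raise RuntimeError("IO block not acknowledged by every CVM; not restarting")
    # T120: spread VM start requests over the available hosts.
    # Round-robin is an assumption; the post does not describe the placement policy.
    placement: Dict[str, List[str]] = {h: [] for h in healthy_hosts}
    for vm, host in zip(vms, cycle(healthy_hosts)):
        placement[host].append(vm)
    return placement


stargates = [StargateStub(f"cvm-{i}") for i in (1, 3, 4)]
plan = handle_host_failure("ahv-host-2", ["vm-a", "vm-b", "vm-c", "vm-d"],
                           stargates, ["ahv-host-1", "ahv-host-3", "ahv-host-4"])
print(plan)
```

Raising instead of restarting when an acknowledgement is missing mirrors the post's point that the Master waits for all Stargate processes before it continues.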

Failure Scenario II – Acropolis Master is alive and a remote AHV host is network partitioned

The major difference between Failure Scenario I and Failure Scenario II is that the VMs on the network-partitioned AHV Host(s) can actually keep running.

However, since we deny the network-partitioned AHV Host access to the VMs' virtual disks, the VMs on that host will fail 45 seconds after their first failed IO. This means we will not end up with multiple copies of the same VM running.
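The self-fencing rule can be modeled as a countdown that starts at the first failed IO and is not moved by later failures. This is a hypothetical model (`PartitionedVm` and `SELF_FENCE_AFTER_S` are illustrative names), not how AHV implements it. It also assumes the VM issues an IO right after the block at T60. In that case it stops at about T105, before the T120 restarts.

```python
from typing import Optional

SELF_FENCE_AFTER_S = 45  # from the post: VMs fail 45 s after the first failed IO


class PartitionedVm:
    """Hypothetical model of a VM on a host whose IO Stargate now rejects."""

    def __init__(self, name: str):
        self.name = name
        self.first_failed_io: Optional[int] = None
        self.running = True

    def on_failed_io(self, now: int) -> None:
        # Only the first failed IO starts the countdown.
        if self.first_failed_io is None:
            self.first_failed_io = now

    def tick(self, now: int) -> bool:
        """Return whether the VM is still running at time `now` (seconds)."""
        if (self.running and self.first_failed_io is not None
                and now - self.first_failed_io >= SELF_FENCE_AFTER_S):
            self.running = False
        return self.running


vm = PartitionedVm("vm-a")
vm.on_failed_io(60)   # first IO after the block at T60 fails
vm.on_failed_io(70)   # later failures do not move the deadline
print(vm.tick(104), vm.tick(105))  # prints True False
```

The timing is a secondary safeguard. What prevents two copies from corrupting data is that the partitioned copy cannot complete any IO once Stargate blocks it.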

| Time in sec | Event |
|---|---|
| Before T0 | Normal operation: the Acropolis Master successfully completes the health checks against the libvirtd process on all remote AHV hosts. |
| T0 | The Acropolis Master loses network connectivity to the libvirtd process on the remote AHV host(s). |
| T20 | The Acropolis Master starts a 40-second timeout. |
| T60 | The Acropolis Master instructs the Stargate processes on all CVMs to block IO from the AHV host it cannot establish a connection to, then waits for all remote CVM Stargate processes to acknowledge the IO block. Because all IO is blocked, the VMs cannot make any progress on the network-partitioned AHV Host, so it is safe to continue. The VMs will commit suicide 45 seconds after the first failed IO request on the network-partitioned AHV Host. |
| T120 | All VMs are restarted. The Acropolis Master is responsible for distributing the VM start requests to the available AHV hosts. |

Failure Scenario III – Acropolis Master fails

The last scenario I want to describe is what happens when the Acropolis Master fails. The failure can be caused by any of the following:

- Failure of the CVM where the Acropolis Master runs.

- Failure of the AHV Host running the CVM that hosts the Acropolis Master.

- Network partition of the AHV Host running the CVM that hosts the Acropolis Master.

| Time in sec | Event |
|---|---|
| Before T0 | Normal operation: the Acropolis Master successfully completes the health checks against the libvirtd process on all remote AHV hosts. |
| T0 | The Acropolis Master fails. |
| T20 | A new Acropolis Master is elected on one of the available AHV hosts. |
| T60 | The new Acropolis Master instructs the Stargate processes on all CVMs to block IO from the AHV host where the original Acropolis Master lived, then waits for all remote CVM Stargate processes to acknowledge the IO block. |
| T120 | All VMs are restarted. The Acropolis Master is responsible for distributing the VM start requests to the available AHV hosts. |
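The table above reduces the three causes to the same outcome: a new master is elected among the remaining CVMs, and the host where the old master lived is the one that gets fenced. The sketch below illustrates that flow. The election rule used here (the lowest-named surviving CVM wins) is purely a hypothetical stand-in, because the post does not describe how Acropolis elects its master. `elect_new_master` and `master_failover` are illustrative names.

```python
from typing import Dict


def elect_new_master(cvm_alive: Dict[str, bool], old_master: str) -> str:
    """Hypothetical rule: lowest-named surviving CVM becomes master."""
    candidates = sorted(c for c, alive in cvm_alive.items()
                        if alive and c != old_master)
    if not candidates:
        raise RuntimeError("no surviving CVM can become Acropolis Master")
    return candidates[0]


def master_failover(cvm_to_host: Dict[str, str], cvm_alive: Dict[str, bool],
                    old_master: str) -> Dict[str, str]:
    # T20: elect a new master among the CVMs that are still reachable.
    new_master = elect_new_master(cvm_alive, old_master)
    # T60: the host where the old master lived is the one whose IO gets blocked,
    # whether the CVM failed, the host failed, or the host was partitioned.
    return {"new_master": new_master, "fence_host": cvm_to_host[old_master]}


cvm_to_host = {"cvm-1": "ahv-host-1", "cvm-2": "ahv-host-2", "cvm-3": "ahv-host-3"}
alive = {"cvm-1": False, "cvm-2": True, "cvm-3": True}
print(master_failover(cvm_to_host, alive, old_master="cvm-1"))
```

The old master is excluded even if it still reports itself alive, which covers the partition case where it runs but the rest of the cluster cannot reach it.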

Conclusion

Without enabling any feature at all, your Acropolis Cluster with AHV is protected against an AHV Host or CVM failure. The only configuration decision is whether you need a guarantee that all VMs can be restarted in case of an AHV Host failure.
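The post does not describe how the restart guarantee is enforced. As an illustration only, here is the question such a guarantee has to answer: if any single host fails, can its VMs fit into the free memory of the remaining hosts? The sketch below looks only at memory and uses first-fit decreasing. This is a packing heuristic, so `True` means it found a placement and `False` means it did not. `survives_any_single_host_failure` and the GiB figures are hypothetical.

```python
from typing import Dict, List


def survives_any_single_host_failure(free_gib: Dict[str, float],
                                     vms_gib: Dict[str, List[float]]) -> bool:
    """For every host, try to pack its VMs (largest first) into the free
    memory of the other hosts using first-fit decreasing."""
    for failed in vms_gib:
        spare = [free_gib[h] for h in free_gib if h != failed]
        for vm in sorted(vms_gib[failed], reverse=True):
            for i, room in enumerate(spare):
                if vm <= room:
                    spare[i] = room - vm  # place the VM on the first host that fits
                    break
            else:
                return False  # no remaining host has room for this VM
    return True


free = {"ahv-host-1": 64, "ahv-host-2": 64, "ahv-host-3": 64}
vms = {"ahv-host-1": [32, 32], "ahv-host-2": [48], "ahv-host-3": [16]}
print(survives_any_single_host_failure(free, vms))  # prints True
```

Checking VM by VM, rather than comparing totals, catches fragmentation. For example, a 40 GiB VM does not fit on two hosts with 30 GiB free each, even though 60 GiB is free in total.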

VMHA should start restarting the failed VMs no more than 120 seconds after an AHV Host fails or becomes network partitioned. How long it takes until all VMs are up and running depends on the number of VMs that need to be powered on, the number of power-on tasks per AHV Host, and the maximum number of parallel tasks in an Acropolis Cluster.
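Those three factors combine into a rough back-of-the-envelope estimate. Effective parallelism is the smaller of the per-host task capacity and the cluster-wide limit. The VMs then power on in "waves" of that size after the (at most) 120-second detection phase. All inputs below, including the per-VM power-on time, are hypothetical values for illustration, not Nutanix defaults.

```python
import math


def estimate_restart_seconds(vms: int, hosts: int, tasks_per_host: int,
                             cluster_max_tasks: int, seconds_per_power_on: float,
                             ha_start_s: float = 120.0) -> float:
    """Rough estimate of when the last VM is powered on (all inputs assumed)."""
    # Parallelism is capped by both per-host and cluster-wide task limits.
    parallel = min(hosts * tasks_per_host, cluster_max_tasks)
    if parallel <= 0:
        raise ValueError("need at least one parallel power-on task")
    # VMs power on in waves of `parallel` after VMHA starts the restarts.
    waves = math.ceil(vms / parallel)
    return ha_start_s + waves * seconds_per_power_on


print(estimate_restart_seconds(vms=100, hosts=3, tasks_per_host=8,
                               cluster_max_tasks=16, seconds_per_power_on=10))
# prints 190.0
```

With these numbers the cluster-wide limit (16) is the bottleneck rather than the 24 per-host slots, so 100 VMs need 7 waves.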