Updated 2019-11-01 – This blog post is obsolete; please see Nutanix AHV Virtual Machine High Availability for the most recent information.

—————————————————————————————————————————————

This is the first blog in a series of blog posts for the Nutanix Acropolis Virtual Machine High Availability (VMHA) feature. This feature only comes into play when you run Acropolis Hypervisor (AHV) on your Nutanix nodes (physical servers).

Before I continue, I would like to explain some terminology:

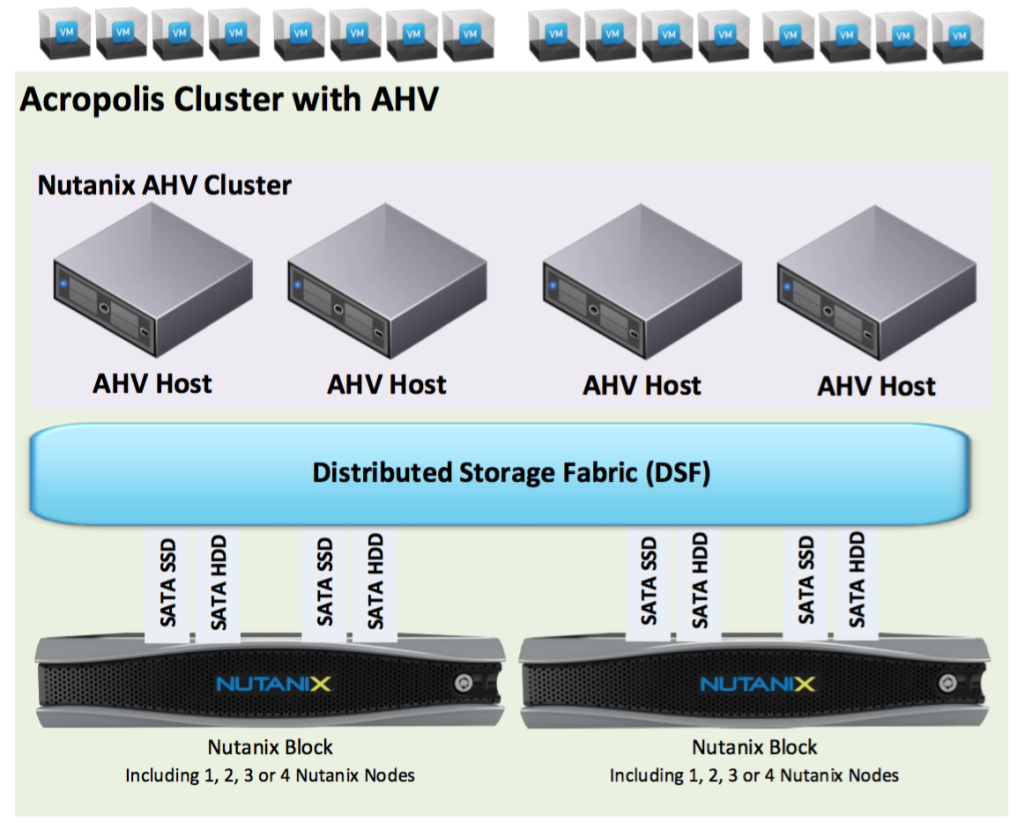

- Acropolis Cluster with AHV – Refers to the physical and logical elements required to run virtual machines, such as:

  - Physical servers, called Nutanix nodes when not referring to a specific hypervisor running on the server.

  - The storage layer, called Distributed Storage Fabric (DSF), formerly known as Nutanix Distributed File System (NDFS).

  - The hypervisor, which can be Acropolis Hypervisor, ESXi or Hyper-V.

- Nutanix AHV cluster – This term is used when referring to the logical grouping of AHV Hosts

- Acropolis Hypervisor (AHV) host – Refers to an individual server/Nutanix node running the Acropolis Hypervisor software. The term Nutanix node is often used when talking about the server without a specific hypervisor in mind.

- Nutanix Node – Physical servers are called Nutanix nodes when not referring to a specific hypervisor running on the server. In this context, a Nutanix node is the same as an AHV host.

- Nutanix Block – Refers to the 2U chassis in which the Nutanix nodes are placed. A Nutanix block can hold 1, 2, 3 or a maximum of 4 Nutanix nodes, depending on the node model.

VMHA ensures that VMs are restarted on another Acropolis Hypervisor (AHV) host in the Nutanix AHV cluster if the host they are running on fails. The feature also comes into play during a network partition, which will be discussed in a later blog post. VMHA is managed by the Acropolis master in the Nutanix AHV cluster and uses a master/subordinate architecture.

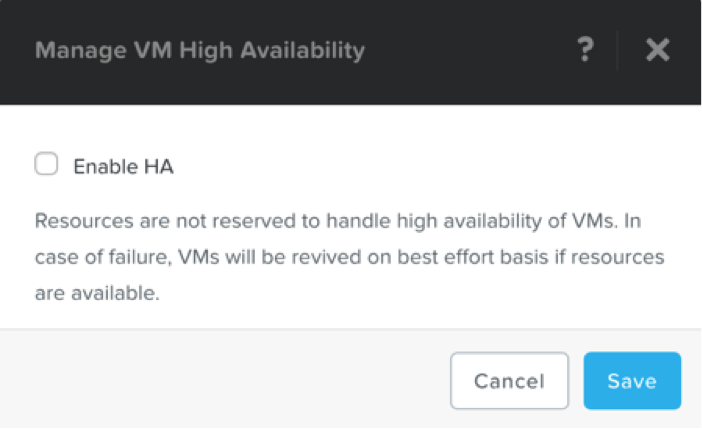

VMHA can be configured via PRISM UI or the Acropolis CLI (aCLI), where aCLI offers the most advanced configuration options. VMHA is enabled automatically as soon as you create the Nutanix AHV cluster, and it has two modes:

- Default – This mode requires no configuration and is activated automatically when the Nutanix AHV cluster is created. When an AHV host becomes unavailable, the VMs that were running on it are restarted on the remaining hosts, if the available resources in the Nutanix AHV cluster allow it.

- Guarantee – This non-default configuration reserves capacity to guarantee that all failed VMs will be restarted on other AHV hosts in the Nutanix AHV cluster during a host failure. Use the Enable HA check box to turn on VMHA guarantee mode and start reserving capacity.
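The practical difference between the two modes shows up when the surviving hosts run short of resources. The Python sketch below is a simplified, hypothetical model of the default (best-effort) mode, not Nutanix's scheduler: it considers memory only, uses first-fit decreasing as an assumed placement strategy, and the function, VM and host names are made up for illustration.

```python
from typing import Dict, List, Tuple


def restart_failed_vms(
    failed_vms: Dict[str, int],
    free_mem_gib: Dict[str, int],
) -> Tuple[Dict[str, str], List[str]]:
    """Best-effort restart of failed VMs (hypothetical model of the default mode).

    failed_vms   -- VM name -> memory required (GiB)
    free_mem_gib -- surviving AHV host name -> free memory (GiB)
    Returns (placements VM -> host, VMs that could not be restarted).
    """
    free = dict(free_mem_gib)  # work on a copy so the caller's data is untouched
    placements: Dict[str, str] = {}
    not_restarted: List[str] = []
    # Place the largest VMs first (first-fit decreasing, an illustrative choice).
    for vm, need in sorted(failed_vms.items(), key=lambda kv: kv[1], reverse=True):
        host = next((h for h, mem in free.items() if mem >= need), None)
        if host is None:
            # No surviving host has room: in default mode the VM stays powered off.
            not_restarted.append(vm)
        else:
            placements[vm] = host
            free[host] -= need
    return placements, not_restarted


placements, skipped = restart_failed_vms(
    {"db01": 64, "web01": 16, "web02": 16},
    {"ahv-2": 70, "ahv-3": 20},
)
print(placements)  # {'db01': 'ahv-2', 'web01': 'ahv-3'}
print(skipped)     # ['web02']
```

Guarantee mode exists to keep the second list empty: capacity is reserved in advance, so in this model every failed VM would find a host for the number of host failures the cluster is configured to tolerate.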

When using the Guarantee configuration the following resource protection will be applied:

- One AHV host failure if the DSF is configured for Nutanix Fault Tolerance 1 (Redundancy Factor 2).

- Up to two AHV host failures if the DSF is configured for Nutanix Fault Tolerance 2 (Redundancy Factor 3).

If needed, you can change the number of reserved AHV hosts using the following aCLI syntax:

- acli ha.update num_host_failures_to_tolerate=X
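The rules above can be restated as a small Python sketch: by default, the number of host failures that Guarantee mode reserves capacity for follows the DSF fault tolerance level, unless it has been overridden with `acli ha.update num_host_failures_to_tolerate=X`. The function name and the `override` parameter are hypothetical, and the sketch does not talk to aCLI.

```python
from typing import Optional

# Redundancy Factor -> Nutanix Fault Tolerance level, as described in the post.
RF_TO_FT = {2: 1, 3: 2}


def host_failures_to_tolerate(redundancy_factor: int, override: Optional[int] = None) -> int:
    """Host failures Guarantee mode reserves capacity for (hypothetical helper).

    Without an override this is the FT level: 1 for FT1/RF2, up to 2 for FT2/RF3.
    `override` models a value set with `acli ha.update num_host_failures_to_tolerate=X`.
    """
    if redundancy_factor not in RF_TO_FT:
        raise ValueError(f"unsupported redundancy factor: {redundancy_factor}")
    if override is not None:
        return override
    return RF_TO_FT[redundancy_factor]


print(host_failures_to_tolerate(2))              # 1
print(host_failures_to_tolerate(3))              # 2
print(host_failures_to_tolerate(3, override=1))  # 1
```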

There are two reservation types, and the one that best fits your Acropolis Cluster with AHV is selected automatically. The reservation types are:

- kAcropolisHAReserveHosts – One or more AHV hosts are reserved for VMHA.

  - This reservation type is selected automatically when the Nutanix AHV cluster consists of homogeneous AHV hosts, meaning hosts with the same amount of RAM.

- kAcropolisHAReserveSegments – Resources are reserved across multiple AHV hosts.

  - This reservation type is selected automatically when the Nutanix AHV cluster consists of heterogeneous AHV hosts, meaning hosts with different amounts of RAM installed.
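The selection rule above, together with the capacity cost of reserving whole hosts, can be expressed as a short Python sketch. It only encodes the rule as stated in this post (same RAM on every host versus different RAM); the real selection happens inside the cluster and may consider more. The function names and the GiB figures are hypothetical.

```python
from typing import Dict


def select_reservation_type(host_ram_gib: Dict[str, int]) -> str:
    """Pick a reservation type using the rule described above.

    Same RAM on every host (homogeneous)   -> kAcropolisHAReserveHosts
    Different RAM per host (heterogeneous) -> kAcropolisHAReserveSegments
    """
    if not host_ram_gib:
        raise ValueError("cluster has no hosts")
    if len(set(host_ram_gib.values())) == 1:
        return "kAcropolisHAReserveHosts"
    return "kAcropolisHAReserveSegments"


def usable_ram_with_reserved_hosts(host_ram_gib: Dict[str, int], reserved_hosts: int) -> int:
    """RAM left for VMs when whole hosts are held back (homogeneous clusters only)."""
    if select_reservation_type(host_ram_gib) != "kAcropolisHAReserveHosts":
        raise ValueError("host reservation arithmetic assumes identical hosts")
    if not 0 <= reserved_hosts < len(host_ram_gib):
        raise ValueError("must leave at least one host for running VMs")
    per_host = next(iter(host_ram_gib.values()))
    return per_host * (len(host_ram_gib) - reserved_hosts)


homogeneous = {"ahv-1": 256, "ahv-2": 256, "ahv-3": 256, "ahv-4": 256}
mixed = {"ahv-1": 256, "ahv-2": 512, "ahv-3": 256}

print(select_reservation_type(homogeneous))            # kAcropolisHAReserveHosts
print(select_reservation_type(mixed))                  # kAcropolisHAReserveSegments
print(usable_ram_with_reserved_hosts(homogeneous, 1))  # 768
```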

All VMs running in the Nutanix AHV cluster are protected by VMHA, but you can turn off VMHA on a per-VM basis by setting ha_priority to a negative value (-1) with aCLI, for example:

- acli vm.update VM-name ha_priority=-1

- acli vm.create VM-name ha_priority=-1

If the priority has been changed, you can view it by running the following command:

- acli vm.get VM-name

The setting appears at the end of the output and looks similar to this:

- ha_priority: -1

Note: If the configuration has not been changed, the VM keeps its default value of 0, which is not shown in the “acli vm.get VM-name” output.

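Because the default value of 0 is not printed, a script that audits which VMs are excluded from VMHA has to treat a missing `ha_priority` line as 0. The Python sketch below does that on captured `acli vm.get VM-name` text. It assumes only that the setting appears as a `ha_priority: <value>` line, as in the example above; the sample strings are abbreviated, made-up stand-ins rather than real aCLI output, and the function names are hypothetical.

```python
import re

# Matches a line such as "ha_priority: -1" (leading whitespace allowed).
_HA_PRIORITY = re.compile(r"^\s*ha_priority:\s*(-?\d+)\s*$", re.MULTILINE)


def ha_priority(vm_get_output: str) -> int:
    """Return the VM's ha_priority, defaulting to 0 when the line is absent."""
    match = _HA_PRIORITY.search(vm_get_output)
    return int(match.group(1)) if match else 0


def is_protected_by_vmha(vm_get_output: str) -> bool:
    """A negative ha_priority (e.g. -1) means VMHA is turned off for this VM."""
    return ha_priority(vm_get_output) >= 0


# Hypothetical, abbreviated vm.get outputs; only the ha_priority line matters here.
changed_vm = "VM-name {\n  ...\n  ha_priority: -1\n}\n"
default_vm = "VM-name {\n  ...\n}\n"

print(ha_priority(changed_vm), is_protected_by_vmha(changed_vm))  # -1 False
print(ha_priority(default_vm), is_protected_by_vmha(default_vm))  # 0 True
```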

To respect data locality, which is important for VM performance, VMHA makes sure that VMs previously running on a failed AHV host are migrated back to that host when it comes back online.
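The return-to-home behavior can be modeled by remembering each VM's original host. The Python class below is a hypothetical bookkeeping sketch, not how the Acropolis master implements it: the class and method names are invented, and on failure it simply restarts VMs on the first surviving host to keep the example short.

```python
from typing import Dict, List


class HomeHostTracker:
    """Hypothetical model: restart VMs elsewhere on failure, send them home on recovery."""

    def __init__(self, placement: Dict[str, str]) -> None:
        self.home = dict(placement)     # VM -> host it ran on before any failure
        self.current = dict(placement)  # VM -> host it runs on right now

    def host_failed(self, failed: str, survivors: List[str]) -> None:
        if not survivors:
            raise ValueError("no surviving hosts to restart VMs on")
        # Restart every VM from the failed host on a surviving host
        # (always the first survivor, purely to keep the sketch short).
        for vm, host in self.current.items():
            if host == failed:
                self.current[vm] = survivors[0]

    def host_recovered(self, recovered: str) -> List[str]:
        # Migrate back every VM whose home is the recovered host (data locality).
        moved = [vm for vm, home in self.home.items()
                 if home == recovered and self.current[vm] != recovered]
        for vm in moved:
            self.current[vm] = recovered
        return moved


tracker = HomeHostTracker({"vm1": "ahv-1", "vm2": "ahv-1", "vm3": "ahv-2"})
tracker.host_failed("ahv-1", survivors=["ahv-2", "ahv-3"])
print(tracker.current)                  # {'vm1': 'ahv-2', 'vm2': 'ahv-2', 'vm3': 'ahv-2'}
print(tracker.host_recovered("ahv-1"))  # ['vm1', 'vm2']
print(tracker.current)                  # {'vm1': 'ahv-1', 'vm2': 'ahv-1', 'vm3': 'ahv-2'}
```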

Take the following into account if/when you make any VMHA changes:

- Use the non-default VMHA mode Guarantee when you need to ensure that all VMs can be restarted in case of an AHV host failure. Otherwise, use the default VMHA mode.

- When using the VMHA mode Guarantee, always use the default VMHA reservation type, HAReserveSegments, for heterogeneous Nutanix AHV clusters.

  Important: Never change the VMHA reservation type for heterogeneous Acropolis Clusters with AHV.

- Use the default VMHA reservation type, HAReserveHosts, for homogeneous Nutanix AHV clusters.

There may be circumstances in which you want to change the default reservation type, HAReserveHosts, for homogeneous clusters. In such cases, keep the following in mind:

- Advantage of segments – Preferable when performance is critical, including maximizing local disk access and CPU performance.

- Advantage of hosts – The VM consolidation ratio is higher because fewer host resources are reserved compared to the segments reservation type.
