This blog post is my personal summary of what was announced, discussed and presented at the Nutanix .Next conference at the Wynn resort in Las Vegas, 20-22 June 2016. It was a great event and I’m sure you’ll find the new features really exciting.

The key message of the conference was that Nutanix is the Enterprise Cloud Company. It can provide all the features and capabilities you need to build and manage your own elastic (public), predictable (private) or hybrid cloud solution, not just hyper-converged infrastructure (HCI).

The following is an overview only, not a complete description of each feature's functionality.

Run all your virtual and physical workloads on Nutanix with ABS

Acropolis Block Services (ABS) presents Nutanix storage over iSCSI to physical servers and VMs. All Nutanix ADSF features are supported, and new storage capacity is added automatically when the Nutanix cluster scales out, so no client-side action is required. The built-in load balancing removes the need for MPIO and ALUA. The following are a few use cases for ABS:

- When the existing storage solution can be replaced by Nutanix but not all physical servers are at end of life.

- Windows clusters that require SCSI-3 persistent reservations for shared storage.

- iSCSI for Microsoft Exchange Server.

- Shared storage for Oracle RAC environments.

- Windows Server Failover Clustering (WSFC).

- Shared storage for Linux-based clusters.

- Stand-alone physical servers with any of the above requirements.

- Licensing requirements that force organisations to stay with physical servers connected to remote storage.

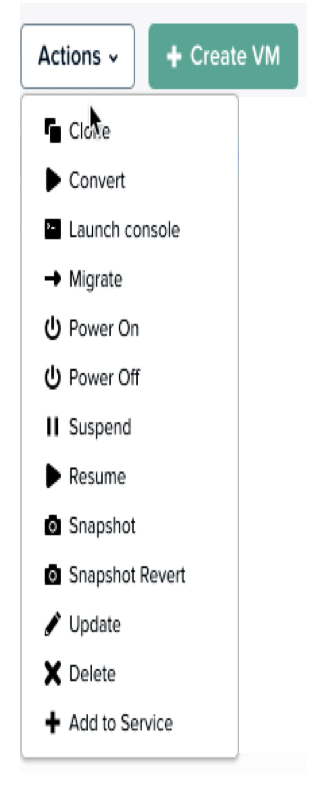

Self-Service

There will be a built-in way in the Nutanix solution to create Projects and assign users (via Active Directory/LDAP) and resources to them. The resources include CPU, RAM, storage and network. This will simplify deployment and delivery of both VMs and applications. Project admins grant access to specific images, which self-service end users can then deploy at any time within their quota.

The Self-Service Projects concept will be familiar to anyone who knows e.g. OpenStack, and for people familiar with VMware technologies a Project is comparable to a vRealize Automation tenant.
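To make the quota idea concrete, here is a minimal sketch of how a project-level quota check could work, assuming a simple model where each Project has a set of users, a set of images the project admin has granted, and per-resource limits. The `Project` class, its fields and the resource names (`vcpu`, `ram_gb`, `storage_gb`) are hypothetical illustrations, not a Nutanix API, and network resources are left out for brevity.

```python
from dataclasses import dataclass, field


# Hypothetical model of a self-service Project; not a Nutanix API.
@dataclass
class Project:
    name: str
    quota: dict               # per-resource limits, e.g. {"vcpu": 8, "ram_gb": 32}
    allowed_images: set       # images the project admin has granted access to
    users: set                # AD/LDAP user names assigned to the project
    usage: dict = field(default_factory=dict)

    def can_deploy(self, user: str, image: str, request: dict) -> bool:
        """Return True if the user may deploy the image within the remaining quota."""
        if user not in self.users or image not in self.allowed_images:
            return False
        # Every requested resource must fit in what is left of the quota.
        return all(
            self.usage.get(res, 0) + amount <= self.quota.get(res, 0)
            for res, amount in request.items()
        )

    def deploy(self, user: str, image: str, request: dict) -> bool:
        """Consume quota if the deployment is allowed; return whether it happened."""
        if not self.can_deploy(user, image, request):
            return False
        for res, amount in request.items():
            self.usage[res] = self.usage.get(res, 0) + amount
        return True


dev = Project(
    name="dev",
    quota={"vcpu": 8, "ram_gb": 32, "storage_gb": 200},
    allowed_images={"centos7-web"},
    users={"alice"},
)
small_vm = {"vcpu": 4, "ram_gb": 16, "storage_gb": 100}
print(dev.deploy("alice", "centos7-web", small_vm))  # True
print(dev.deploy("alice", "centos7-web", small_vm))  # True, quota now fully used
print(dev.deploy("alice", "centos7-web", small_vm))  # False, would exceed quota
print(dev.deploy("bob", "centos7-web", small_vm))    # False, not a project member
```

The check is done before any resources are consumed, so a rejected request leaves the project's usage unchanged.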

This is definitely something I’ll blog more about in the future, so stay tuned.

AHV Improvements

Two major improvements were announced for AHV (formerly known as Acropolis Hypervisor), which was released 18 months ago; in short, it is a Nutanix hypervisor built on KVM. VM, network and VM HA management are already integrated in PRISM Element, and additional configuration capabilities were announced. Some behind-the-scenes improvements have also been added.

Dynamic Scheduling Plus Affinity and Anti-Affinity Rules

The intelligent scheduling will proactively identify both CPU and storage contention and, if and when needed, automatically move VMs between the available AHV hosts in the cluster. It honors the configured scheduling rules.

Initial VM scheduling/placement, meaning that AHV places each VM on an AHV host based on current utilisation, has been available since AHV became GA 18 months ago. Configuration options will soon be available for the following:

- VMs on the same AHV host – Useful for e.g. application isolation, and when VM1 and VM2 depend on each other and communicate a lot. Placing them on the same AHV host then improves performance.

- VMs on different AHV hosts – For application availability, e.g. making sure domain controller VM1 and domain controller VM2 never run on the same AHV host.

- VM to AHV host – Useful from e.g. a licensing perspective; in a mixed AHV cluster you can also make sure VM X runs on AHV host Y, where faster CPUs are available.

These configuration options are much like the vSphere Cluster DRS rules.
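The three rule types can be illustrated with a small placement check. This is a minimal sketch, assuming a greedy approach that tries hosts from least to most utilised and takes the first one that keeps every rule satisfied; it is not how AHV's scheduler is actually implemented. The `Rule` class, the rule kind strings, the host names and the utilisation figures are all hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


# Hypothetical rule model for illustration; not the AHV API.
@dataclass(frozen=True)
class Rule:
    kind: str            # "same_host", "different_hosts" or "vm_to_host"
    vms: tuple           # VMs the rule applies to
    hosts: tuple = ()    # allowed hosts, only used by "vm_to_host"


def satisfies(placement: Dict[str, str], rules: List[Rule]) -> bool:
    """Check a VM -> host placement against all rules (unplaced VMs are ignored)."""
    for rule in rules:
        placed = [placement[vm] for vm in rule.vms if vm in placement]
        if rule.kind == "same_host" and len(set(placed)) > 1:
            return False
        if rule.kind == "different_hosts" and len(set(placed)) < len(placed):
            return False
        if rule.kind == "vm_to_host" and any(h not in rule.hosts for h in placed):
            return False
    return True


def pick_host(vm: str, placement: Dict[str, str], load: Dict[str, float],
              rules: List[Rule]) -> Optional[str]:
    """Return the least-loaded host that keeps every rule satisfied, or None."""
    for host in sorted(load, key=load.get):
        if satisfies({**placement, vm: host}, rules):
            return host
    return None


rules = [
    Rule("different_hosts", ("dc1", "dc2")),         # domain controllers apart
    Rule("same_host", ("app", "db")),                # chatty pair together
    Rule("vm_to_host", ("sql",), hosts=("ahv-3",)),  # licensing / faster CPUs
]
load = {"ahv-1": 0.2, "ahv-2": 0.5, "ahv-3": 0.7}    # hypothetical CPU utilisation
placement = {"dc1": "ahv-1", "app": "ahv-2"}

print(pick_host("dc2", placement, load, rules))  # ahv-2 (ahv-1 already runs dc1)
print(pick_host("db", placement, load, rules))   # ahv-2 (must join app)
print(pick_host("sql", placement, load, rules))  # ahv-3 (pinned host)
```

Note how the rules override pure utilisation: `dc2` and `db` skip the least-loaded host, and `sql` lands on the busiest one because it is pinned there.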

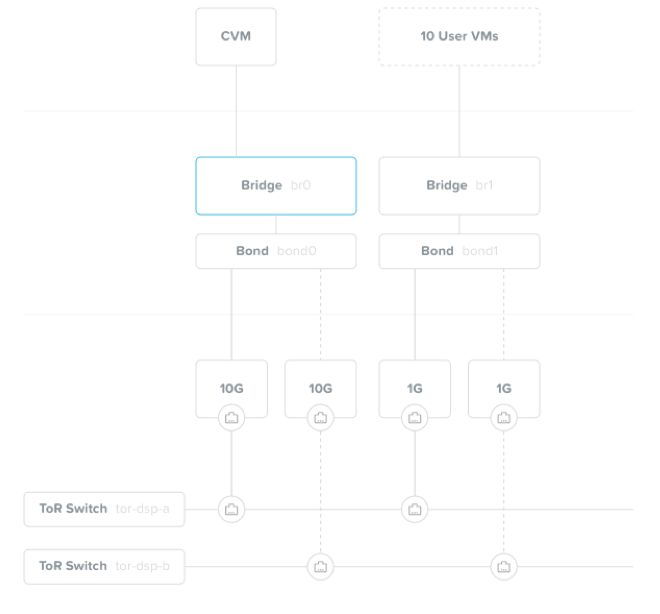

AHV Network Visualization

One thing that has not been exposed in PRISM Element so far is the network connections between VMs, bridges, bonds and physical switches. This has now been solved, and there is a clear view of how the AHV network, built on Open vSwitch (OVS), comes together.

You can choose between multiple views; one of them shows the network connections of the Nutanix CVM and user VMs all the way from the OVS bridge and OVS bond to the physical switch.

In addition to the layout, the health of the connections, the status of NICs and ports, and VLAN misconfigurations will be shown. LLDP/CDP will be used to discover and validate the network topology, and SNMP to retrieve configuration information from the switches.

All flash extended capabilities

Nutanix has had the all-flash NX-9040 for quite some time, and the all-flash capability has now been extended to the NX-3000 series and is expected to be available on several NX G5 models in the future. Nutanix data locality makes sure a VM's data is stored on the Nutanix node where the VM runs, which is good for performance and also minimises network traffic. Data locality will become even more important when new flash technologies such as NVMe and 3D XPoint become mainstream, since it takes very few of these devices to make the network the bottleneck.
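A quick back-of-the-envelope calculation shows why the network becomes the bottleneck. This sketch assumes a single 10 GbE link and an NVMe SSD reading at roughly 2.5 GB/s; the device figure is an illustrative assumption, not a measured or vendor-quoted number, and protocol overhead is ignored.

```python
# Back-of-the-envelope check; the device throughput figure is an assumption.
NETWORK_GBIT_PER_S = 10   # one 10 GbE link
NVME_GBYTE_PER_S = 2.5    # assumed sequential read rate of one NVMe SSD

# 8 bits per byte, so 10 Gbit/s is 1.25 GB/s before any protocol overhead.
network_gbyte_per_s = NETWORK_GBIT_PER_S / 8


def devices_to_saturate(link_gbyte_per_s: float, device_gbyte_per_s: float) -> float:
    """How many devices' worth of reads it takes to fill the network link."""
    return link_gbyte_per_s / device_gbyte_per_s


print(network_gbyte_per_s)                                         # 1.25
print(devices_to_saturate(network_gbyte_per_s, NVME_GBYTE_PER_S))  # 0.5
```

Under these assumptions half of one NVMe device already fills the link, so reads served from a remote node would be network-bound, while reads served locally thanks to data locality are not.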

HCI to Containers via Acropolis Container Services (ACS)

Manage ESXi-based VMs via Nutanix PRISM

Maybe this is just the first step in bringing vSphere management features into Nutanix AOS, but I guess the future will tell.

Microsoft CPS Standard on Nutanix

Nutanix is Windows Server hardware certified for 2012 and 2012 R2, Microsoft Private Cloud Fast Track validated, and a CPS Standard alliance partner. Microsoft Cloud Platform System (CPS) is based on Windows Server 2012 R2, System Center 2012 R2 and Windows Azure Pack. Microsoft CPS can be deployed via Nutanix PRISM Element to build an Azure-consistent hybrid cloud. Alternatively, a custom approach can be used to build a private cloud solution based on Windows Azure Pack, Windows Server and Hyper-V.